Customer challenges

Our client struggled to analyze large volumes of video data efficiently. The analysis required complex processing and the identification of specific detection-area points within each video, and the client's existing systems could not scale to meet the growing demand for video analysis.

Solutions

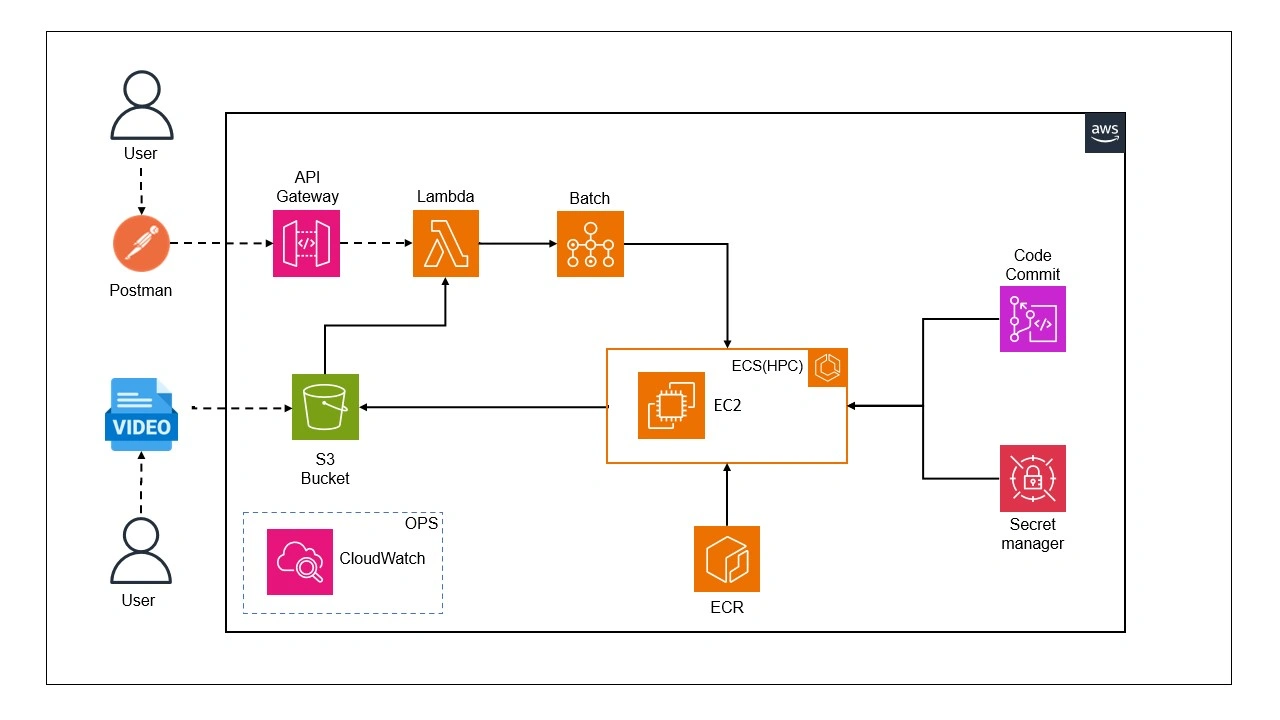

Flask Application on EC2

To address these challenges, we built a Flask web application hosted on an Amazon EC2 instance, giving users a simple interface for uploading and managing videos.

S3 Bucket Integration

When a user submits a video, the application uploads it to an Amazon S3 bucket. The new object triggers a chain of automated processing steps run by AWS services.
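The upload step can be sketched as a small helper that the Flask route calls. This is a minimal sketch, assuming the boto3 S3 client's `upload_fileobj(Fileobj, Bucket, Key)` method; the `uploads/` prefix, the per-upload UUID folder, and the allowed file extensions are hypothetical choices, not details from the original system. The client is passed in so the helper can be tested without AWS access.

```python
import uuid
from pathlib import PurePosixPath
from typing import BinaryIO

# Hypothetical key prefix and accepted formats; the real bucket layout is not described.
UPLOAD_PREFIX = "uploads"
ALLOWED_EXTENSIONS = {".mp4", ".mov", ".avi", ".mkv"}


def build_upload_key(filename: str) -> str:
    """Return a unique S3 key such as uploads/<uuid>/clip.mp4."""
    name = PurePosixPath(filename).name  # drop any client-supplied directories
    if PurePosixPath(name).suffix.lower() not in ALLOWED_EXTENSIONS:
        raise ValueError(f"unsupported video type: {filename!r}")
    # A UUID folder keeps two uploads with the same filename from overwriting each other.
    return f"{UPLOAD_PREFIX}/{uuid.uuid4().hex}/{name}"


def upload_video(s3_client, bucket: str, fileobj: BinaryIO, filename: str) -> str:
    """Stream the video into S3; the new object is what fires the S3 trigger."""
    key = build_upload_key(filename)
    s3_client.upload_fileobj(fileobj, bucket, key)
    return key
```

Inside a Flask route this might be called as `upload_video(boto3.client("s3"), "my-video-bucket", f.stream, f.filename)` with `f = request.files["video"]`, where the bucket name and form field name are again placeholders.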

Lambda Function Activation

The S3 event notification invokes an AWS Lambda function, which in turn starts a batch job that performs the video processing.
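The S3-to-Lambda-to-batch hand-off can be sketched as a Python Lambda handler. This sketch assumes the batch job runs on AWS Batch (the text does not name the service explicitly) and uses boto3's `submit_job`. The queue and job definition names, the `INPUT_BUCKET`/`INPUT_KEY` environment variables, and the `video-` job-name prefix are hypothetical. The Batch client is injectable so the handler can be exercised without AWS access.

```python
import os
import re
from urllib.parse import unquote_plus

# Hypothetical names; in practice they would be set as Lambda environment variables.
JOB_QUEUE = os.environ.get("JOB_QUEUE", "video-processing-queue")
JOB_DEFINITION = os.environ.get("JOB_DEFINITION", "video-processing-job")

_batch_client = None


def _default_batch_client():
    """Create the boto3 Batch client once per Lambda container."""
    global _batch_client
    if _batch_client is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        _batch_client = boto3.client("batch")
    return _batch_client


def job_name_for(key: str) -> str:
    # Batch job names: up to 128 chars, alphanumeric first char, then letters,
    # digits, hyphens and underscores. The fixed prefix guarantees a valid start.
    return ("video-" + re.sub(r"[^A-Za-z0-9_-]", "-", key))[:128]


def lambda_handler(event, context, batch_client=None):
    batch = batch_client or _default_batch_client()
    job_ids = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event notifications are URL-encoded (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        response = batch.submit_job(
            jobName=job_name_for(key),
            jobQueue=JOB_QUEUE,
            jobDefinition=JOB_DEFINITION,
            # Tell the container which video to process.
            containerOverrides={
                "environment": [
                    {"name": "INPUT_BUCKET", "value": bucket},
                    {"name": "INPUT_KEY", "value": key},
                ]
            },
        )
        job_ids.append(response["jobId"])
    return {"submitted": job_ids}
```

Passing the object location through environment variables keeps the Lambda function thin: it only decides *what* to process, while the container decides *how*.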

Docker Images in AWS ECR

The batch job runs from a Docker image stored in Amazon ECR (Elastic Container Registry). The image packages all of the processing dependencies, so every job runs in the same reproducible environment.
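Connecting the batch job to its ECR image can be sketched as building the image URI and the arguments for boto3's `batch.register_job_definition`. This again assumes AWS Batch on an EC2 compute environment (Fargate needs extra fields such as an execution role). The job definition name, the `process_video.py` entry point, and the vCPU and memory defaults are hypothetical.

```python
def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Private ECR image URI: <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def video_job_definition(image_uri: str, vcpus: int = 2, memory_mib: int = 4096) -> dict:
    """Keyword arguments for batch.register_job_definition()."""
    return {
        "jobDefinitionName": "video-processing-job",  # hypothetical name
        "type": "container",
        "containerProperties": {
            "image": image_uri,  # every dependency lives inside this image
            "command": ["python", "process_video.py"],  # hypothetical entry point
            # Batch expects resource values as strings; MEMORY is in MiB.
            "resourceRequirements": [
                {"type": "VCPU", "value": str(vcpus)},
                {"type": "MEMORY", "value": str(memory_mib)},
            ],
        },
    }
```

Registration would then be `boto3.client("batch").register_job_definition(**video_job_definition(uri))`. Pinning an explicit image tag rather than `latest` makes it easier to tell which build processed a given video.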

Code Repositories on AWS CodeCommit

The pipeline also clones the required code repositories from AWS CodeCommit, which keeps the processing code up to date with the latest changes.
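One way to clone a CodeCommit repository from inside the job container is plain `git` over HTTPS with the AWS CLI credential helper, which signs requests using the job's IAM role. This is a sketch under those assumptions (git and the AWS CLI installed in the image, an IAM role with CodeCommit read access); the repository name, destination path, and default `main` branch are hypothetical.

```python
import subprocess
from typing import List


def codecommit_https_url(region: str, repository: str) -> str:
    """HTTPS clone URL for a CodeCommit repository."""
    return f"https://git-codecommit.{region}.amazonaws.com/v1/repos/{repository}"


def clone_command(region: str, repository: str, dest: str, branch: str = "main") -> List[str]:
    """Build the git argv; -c settings apply to this invocation only."""
    return [
        "git",
        # Let the AWS CLI supply credentials derived from the job's IAM role.
        "-c", "credential.helper=!aws codecommit credential-helper $@",
        "-c", "credential.UseHttpPath=true",
        # A shallow clone of one branch is enough to run the latest code.
        "clone", "--depth", "1", "--branch", branch,
        codecommit_https_url(region, repository),
        dest,
    ]


def clone_repository(region: str, repository: str, dest: str, branch: str = "main") -> None:
    """Run the clone, raising CalledProcessError if git fails."""
    subprocess.run(clone_command(region, repository, dest, branch), check=True)
```

Cloning at job start means a code change takes effect on the next job without rebuilding the Docker image; the trade-off is that each job depends on CodeCommit being reachable.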

AWS services used

Results

The solution delivered the following results:

Streamlined Video Analysis: The pipeline made video analysis faster and identified detection-area points within the videos more accurately.

Scalability: The use of AWS resources allowed our system to scale seamlessly, handling large volumes of video data efficiently.

Automated Processing: By automating processes through Lambda functions and batch jobs, we reduced manual intervention and improved overall system efficiency.

Data Organization: Outputs such as video clips, images, and CSV files are written back to the S3 bucket in an organized folder structure, making them easy to manage and access.
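A possible output layout can be sketched as follows. The `outputs/<input key>/<kind>/` structure, the `frame`/`x`/`y` CSV columns, and the `detections.csv` filename are all hypothetical illustrations, not the client's actual schema. The upload uses boto3's `put_object`, with the client passed in so the code runs without AWS.

```python
import csv
import io
from pathlib import PurePosixPath
from typing import Dict, Iterable

# Hypothetical output categories matching the clips, images and CSV files above.
OUTPUT_KINDS = {"clips", "images", "csv"}


def output_key(video_key: str, kind: str, filename: str) -> str:
    """Mirror the input key so outputs of different videos never collide."""
    if kind not in OUTPUT_KINDS:
        raise ValueError(f"unknown output kind: {kind!r}")
    base = str(PurePosixPath(video_key).with_suffix(""))  # drop the video extension
    return f"outputs/{base}/{kind}/{filename}"


def detections_csv(rows: Iterable[Dict[str, object]]) -> bytes:
    """Serialize detection rows (hypothetical columns) to UTF-8 CSV bytes."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["frame", "x", "y"])
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue().encode("utf-8")


def save_detections(s3_client, bucket: str, video_key: str, rows) -> str:
    """Write the CSV next to the video's other outputs and return its key."""
    key = output_key(video_key, "csv", "detections.csv")
    s3_client.put_object(Bucket=bucket, Key=key, Body=detections_csv(rows), ContentType="text/csv")
    return key
```

For an input key `uploads/abc/clip.mp4`, the CSV lands at `outputs/uploads/abc/clip/csv/detections.csv`, and clips and images for the same video sit in sibling `clips/` and `images/` folders.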